Training a deep-learning model efficiently requires a lot of resources, especially GPU compute and GPU memory. Here I compare the time it took to train the same model on 3 computers/servers.

1. My own laptop.

CPU: Intel Core i7-7920HQ (Quad Core 3.10GHz, 4.10GHz Turbo, 8MB 45W, w/Intel HD Graphics 630)

Memory: 64G

GPU: NVIDIA Quadro M1200 w/4GB GDDR5, 640 CUDA cores

2. AWS g2.2xlarge

CPU: 8 vCPU, High Frequency Intel Xeon E5-2670 (Sandy Bridge) Processors

Memory: 15G

GPU: 1 GPU, High-performance NVIDIA GPUs, each with 1,536 CUDA cores and 4GB of video memory

3. AWS g2.8xlarge

CPU: 32 vCPU, High Frequency Intel Xeon E5-2670 (Sandy Bridge) Processors

Memory: 60G

GPU: 4 GPU, High-performance NVIDIA GPUs, each with 1,536 CUDA cores and 4GB of video memory

The AMI. I used udacity-dl – ami-60f24d76 (The official AMI of Udacity’s Deep Learning Foundations) from the community AMIs.

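Test script. Adapted from https://github.com/fchollet/keras/blob/master/examples/imdb_lstm.py

The time spent is tracked with time.time() from the start to the end of the script, so it covers data loading, training, and evaluation, not just training.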

    import time
    from keras.preprocessing import sequence
    from keras.models import Sequential
    from keras.layers import Dense, Embedding
    from keras.layers import LSTM
    from keras.datasets import imdb

    '''Trains a LSTM on the IMDB sentiment classification task.
    The dataset is actually too small for LSTM to be of any advantage
    compared to simpler, much faster methods such as TF-IDF + LogReg.
    Notes:
    - RNNs are tricky. Choice of batch size is important,
    choice of loss and optimizer is critical, etc.
    Some configurations won't converge.
    - LSTM loss decrease patterns during training can be quite different
    from what you see with CNNs/MLPs/etc.
    '''

    start_time = time.time()

    max_features = 20000
    maxlen = 80  # cut texts after this number of words (among top max_features most common words)
    batch_size = 32

    print('Loading data...')
    (x_train, y_train), (x_test, y_test) = imdb.load_data(num_words=max_features)
    print(len(x_train), 'train sequences')
    print(len(x_test), 'test sequences')

    print('Pad sequences (samples x time)')
    x_train = sequence.pad_sequences(x_train, maxlen=maxlen)
    x_test = sequence.pad_sequences(x_test, maxlen=maxlen)
    print('x_train shape:', x_train.shape)
    print('x_test shape:', x_test.shape)

    print('Build model...')
    model = Sequential()
    model.add(Embedding(max_features, 128))
    model.add(LSTM(128, dropout=0.2, recurrent_dropout=0.2))
    model.add(Dense(1, activation='sigmoid'))

    # try using different optimizers and different optimizer configs
    model.compile(loss='binary_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

    print('Train...')
    model.fit(x_train, y_train,
              batch_size=batch_size,
              epochs=5,
              validation_data=(x_test, y_test))
    score, acc = model.evaluate(x_test, y_test,
                                batch_size=batch_size)
    print('Test score:', score)
    print('Test accuracy:', acc)

    time_taken = time.time() - start_time
    print(time_taken)
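
Because the script measures one total, the download, training, and evaluation times are mixed together (a later note in this post mentions about 25 seconds of downloading). Below is a minimal sketch of how each phase could be timed separately. The `timed` helper and the `timings` dictionary are hypothetical names, not part of the original script, and the `time.sleep` calls are stand-ins for the real Keras calls.

```python
import time
from contextlib import contextmanager

timings = {}  # phase name -> elapsed wall-clock seconds


@contextmanager
def timed(phase):
    """Record how long the enclosed block takes under the key `phase`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[phase] = time.perf_counter() - start


# Stand-ins for the real steps. In the script above these blocks would wrap
# imdb.load_data(...), model.fit(...) and model.evaluate(...) respectively.
with timed('load'):
    time.sleep(0.02)
with timed('train'):
    time.sleep(0.05)
with timed('evaluate'):
    time.sleep(0.01)

for phase, seconds in timings.items():
    print('%-8s %.2f s' % (phase, seconds))
```

With per-phase numbers, a slow download on one machine no longer distorts the comparison of training speed.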

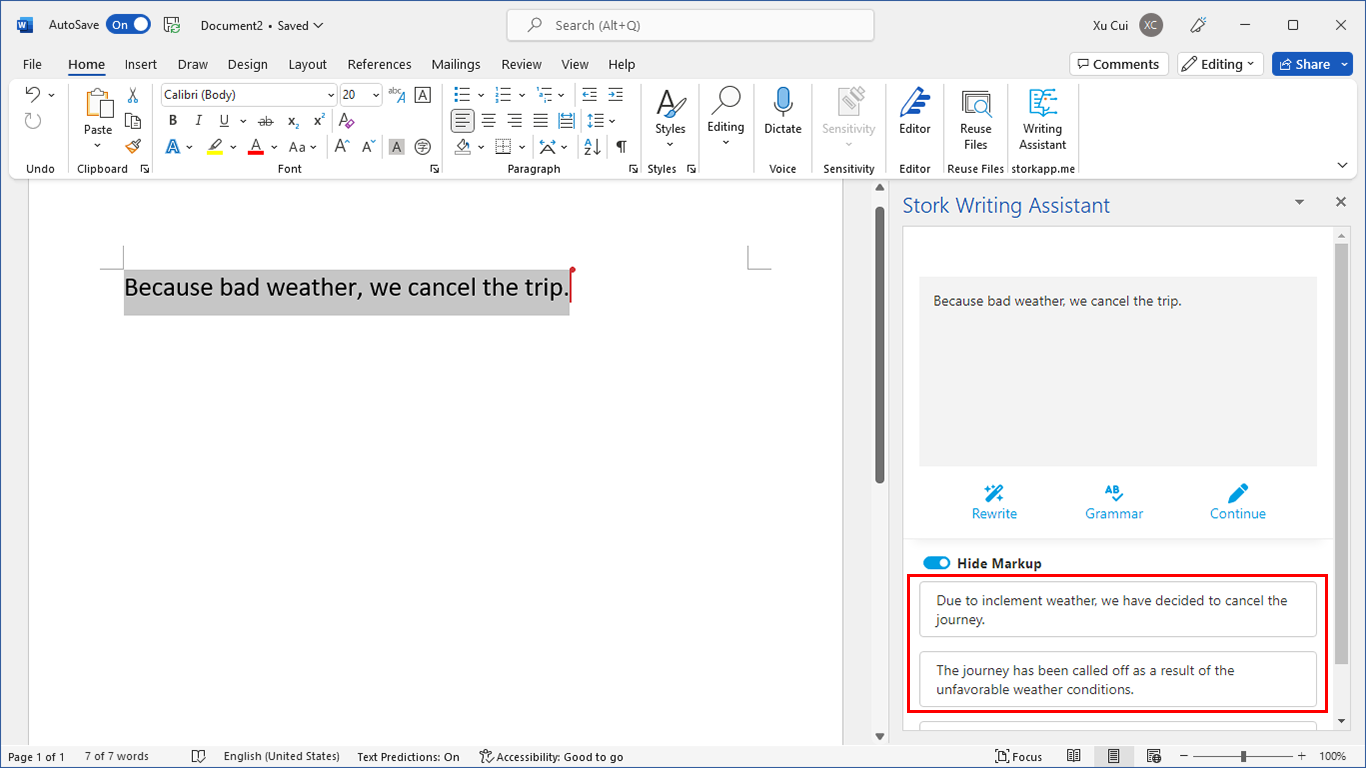

Result: The table below shows the number of seconds it took to run the above script with 3 sets of parameters (batch_size and LSTM size).

| batch_size | LSTM size | Laptop (s) | g2.2xlarge (s) | g2.8xlarge (s) |
| --- | --- | --- | --- | --- |
| 32 | 128 | 546 | 821 | 878 |
| 256 | 256 | 155 | 152 | 157 |
| 1024 | 256 | 125 | 107 | 110 |

The result is surprising and confusing to me. I was expecting the g2 servers to be much faster than my own laptop given the capacity of their GPUs. But the results show that my laptop is actually faster at the smaller parameter values, and only slightly slower at the larger ones.

I do not know what is going on … Does anybody have a clue?
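
To make the comparison concrete, here is a small sketch that expresses each AWS time relative to the laptop and also computes how many optimizer steps one epoch takes at each batch size. The times are copied from the table above. The figure of 25,000 training samples is the size of the Keras IMDB training split and is not stated in the post itself. The function names are made up for this illustration.

```python
import math

# Seconds per run, copied from the table: (batch_size, LSTM size) -> times.
results = {
    (32, 128): {'laptop': 546, 'g2.2xlarge': 821, 'g2.8xlarge': 878},
    (256, 256): {'laptop': 155, 'g2.2xlarge': 152, 'g2.8xlarge': 157},
    (1024, 256): {'laptop': 125, 'g2.2xlarge': 107, 'g2.8xlarge': 110},
}
TRAIN_SAMPLES = 25000  # size of the Keras IMDB training split


def steps_per_epoch(batch_size, n_samples=TRAIN_SAMPLES):
    """Number of batches needed to see every training sample once."""
    return math.ceil(n_samples / batch_size)


def relative_to_laptop(times):
    """Time of each server divided by the laptop time (above 1 = slower)."""
    return {name: round(t / times['laptop'], 2)
            for name, t in times.items() if name != 'laptop'}


for (batch_size, lstm_size), times in results.items():
    print(batch_size, lstm_size,
          steps_per_epoch(batch_size), relative_to_laptop(times))
```

A ratio above 1 means the server was slower than the laptop. The smallest batch size needs roughly 30 times as many steps per epoch as the largest, so one possible reading (not verified here) is that per-step overhead, rather than raw GPU throughput, dominates those runs.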

[update 2017-04-23]

I was thinking maybe the operating system or some configuration was not optimal on AWS. The AMI I used was udacity-dl – ami-60f24d76 (The official AMI of Udacity’s Deep Learning Foundations) from the community AMIs. So I tried a different AMI, a commercial AMI from bitfusion: https://aws.amazon.com/marketplace/pp/B01EYKBEQ0 to see whether it makes a difference. I also tested a new instance type, p2.xlarge, which has 1 NVIDIA K80 GPU (12G GPU memory) and about 60G memory.

| batch_size | LSTM size | Laptop (s) | g2.2xlarge (s) | g2.8xlarge (s) | p2.xlarge (s) |
| --- | --- | --- | --- | --- | --- |
| 1024 | 256 | 125 | 151 | 148 | 101 |

The result is still disappointing. The AWS g2 instances perform worse than my laptop, and the p2 instance is only about 20% faster.

(Of course, the GPU is still faster than the CPU. On my own laptop, using the GPU is ~10x faster than using the CPU to run the above code.)
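
When comparing machines like this, it can help to confirm that the GPU is visible and actually busy while the script trains. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is installed and on the PATH, and it returns `None` when it is not. The `query_gpus` helper is a hypothetical name introduced here, not something from the original test.

```python
import shutil
import subprocess


def query_gpus():
    """Return one 'name, total memory, utilization' line per GPU.

    Returns None when nvidia-smi is missing or fails to run.
    """
    if shutil.which('nvidia-smi') is None:
        return None
    try:
        out = subprocess.check_output(
            ['nvidia-smi',
             '--query-gpu=name,memory.total,utilization.gpu',
             '--format=csv,noheader'],
            universal_newlines=True)
    except (subprocess.CalledProcessError, OSError):
        return None
    return [line.strip() for line in out.splitlines() if line.strip()]


gpus = query_gpus()
print(gpus if gpus is not None else 'nvidia-smi not found or failed')
```

Running this in a second terminal during training shows whether utilization rises. If it stays near zero, the framework is probably running on the CPU.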

[update 2017-04-02]

I checked out Paperspace’s p5000 machine. It comes with a dedicated p5000 GPU with 2048 cores and 16G GPU memory. I tested with the same code and found that training is much faster on the p5000 (ironically, the data downloading part is slow). The training part is 4x faster than on my laptop.

| batch_size | LSTM size | Laptop (s) | g2.2xlarge (s) | g2.8xlarge (s) | p2.xlarge (s) | paperspace p5000 (s) |
| --- | --- | --- | --- | --- | --- | --- |
| 1024 | 256 | 125 | 151 | 148 | 101 | 50 |

(Note: the above times include the data downloading part, which is about 25 seconds.)

Paperspace p5000 wins so far!
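
The 4x figure for training and the totals in the table can be reconciled with a little arithmetic. This sketch assumes the same 25 seconds of downloading is subtracted from both machines. That is an assumption, since the post only gives one download figure.

```python
DOWNLOAD_SECONDS = 25  # "about 25 seconds", per the note above

# Total seconds from the table (batch_size 1024, LSTM size 256).
totals = {'laptop': 125, 'paperspace p5000': 50}


def training_only(total, download=DOWNLOAD_SECONDS):
    """Total run time minus the assumed download time."""
    return total - download


# Assumption: both machines spent the same time downloading the data.
speedup = (training_only(totals['laptop'])
           / training_only(totals['paperspace p5000']))
raw_ratio = totals['laptop'] / totals['paperspace p5000']

print('training-only speedup: %.1fx' % speedup)  # 4.0x
print('end-to-end ratio:      %.1fx' % raw_ratio)  # 2.5x
```

End to end, the p5000 run is 2.5x faster. With the download removed from both sides, the training-only ratio is 4x, which matches the claim in the text.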

[update 2017-05-03]

I purchased Nvidia’s 1080 Ti and installed it in my desktop. It has 3,584 cores and 11G GPU memory. It took 7s for this GPU to train 1 epoch of the above script, which is 3x faster than my laptop.

| batch_size | LSTM size | Laptop (s, 1 epoch) | 1080 Ti (s, 1 epoch) |
| --- | --- | --- | --- |
| 1024 | 256 | 21 | 7 |

The result of your speed test may also be influenced by the operating system of the computer/server. I think it depends on how the OS processes the code you wrote (C, Python, MATLAB, etc.).

@zhouyl

Thank you for your thought!

I’m having a similar experience with AWS and TensorFlow. I’m trying to run examples from this excellent lecture: https://www.youtube.com/watch?v=u4alGiomYP4 (materials are here: https://codelabs.developers.google.com/codelabs/cloud-tensorflow-mnist/#0). My desktop doesn’t have any GPU, but it’s running the examples 2-2.5x faster than a p2.xlarge AWS instance. I’m definitely doing something wrong (I’m pretty new to AWS and TensorFlow), but I can’t see it. I’ll be watching this post to see if you find a solution.

Thanks!

Pavel